Adaptive Non-Uniform Quantization for CSI Compression

AI/ML-based Channel State Information (CSI) compression, which uses autoencoders to reduce the overhead of feeding back MIMO channel information from the UE to the NW, has been an active study item in 3GPP RAN1 Release 18. One of the discussion topics for CSI compression is the quantization of the compressed CSI at the UE side and its dequantization at the NW side. The two main options considered are quantization-aware models, where quantization is included in the trained model, and quantization-non-aware models, where quantization is handled separately from the model.
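As a reference point for the quantization-non-aware option, the sketch below shows a plain uniform scalar quantizer applied after a floating-point encoder: the UE maps each latent to a B-bit index and the NW maps the index back to a value. It is a minimal sketch under our own assumptions, not a specified procedure; the function names, the clipping range `[z_min, z_max]`, and the midpoint reconstruction are hypothetical choices for illustration.

```python
import numpy as np

def uniform_quantize(z, num_bits, z_min, z_max):
    """UE side (hypothetical helper): map real-valued latents to B-bit indices
    on a grid of 2**num_bits equal-width cells over [z_min, z_max]."""
    levels = 2 ** num_bits
    step = (z_max - z_min) / levels
    idx = np.floor((np.clip(z, z_min, z_max) - z_min) / step).astype(int)
    return np.minimum(idx, levels - 1)  # z == z_max belongs to the top cell

def uniform_dequantize(idx, num_bits, z_min, z_max):
    """NW side (hypothetical helper): reconstruct each index as its cell midpoint."""
    levels = 2 ** num_bits
    step = (z_max - z_min) / levels
    return z_min + (np.asarray(idx) + 0.5) * step

# Example: the floating-point encoder output for one CSI sample.
z = np.array([-1.0, -0.2, 0.1, 0.9])
idx = uniform_quantize(z, num_bits=2, z_min=-1.0, z_max=1.0)
z_hat = uniform_dequantize(idx, num_bits=2, z_min=-1.0, z_max=1.0)
print(idx, z_hat)  # indices 0, 1, 2, 3 and midpoints -0.75, -0.25, 0.25, 0.75
```

With 2 bits per latent, the four latents in the example produce a payload of 4 × 2 = 8 bits. Because all cells are equally wide, this quantizer spends as many levels on rarely occupied parts of the latent range as on densely occupied ones.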

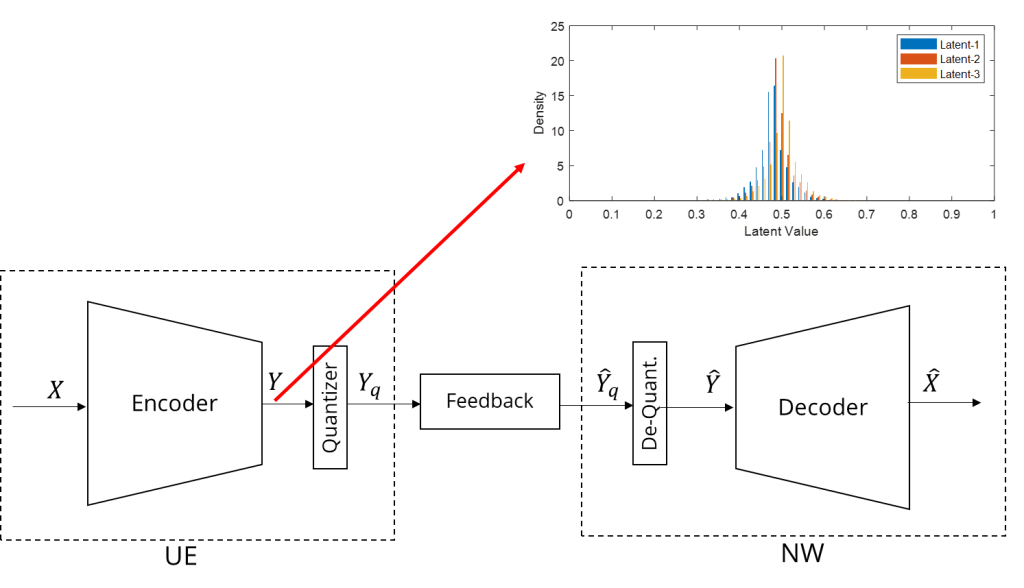

We focus on improving the performance of quantization-non-aware models by introducing adaptive non-uniform quantization of the compressed CSI (i.e., of the CSI encoder output). Based on our observation that the latent variables follow a non-uniform distribution, as shown in Figure 1, we have proposed and evaluated two adaptive non-uniform quantization methods: clustering-based and cumulative distribution function (CDF)-based. Additionally, the distribution statistics differ from one latent variable to another. Therefore, we have further proposed and evaluated non-uniform quantization designed either per latent or across all latents.

Figure 1: Quantization for CSI compression
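The two adaptive designs and the two design scopes can be sketched as codebook construction from a set of training latents. This is a minimal illustration under our own assumptions, not the evaluated implementation: the clustering-based design is taken to be 1-D k-means (Lloyd's algorithm), the CDF-based design places cell boundaries at equal-probability quantiles, quality is measured by mean squared error rather than SGCS for simplicity, and all function names, the iteration count, and the synthetic Gaussian/Laplacian latents are hypothetical.

```python
import numpy as np

def cdf_codebook(samples, num_bits):
    """CDF-based design (assumed form): cell boundaries at equal-probability
    quantiles of the empirical distribution, so each of the 2**num_bits cells
    holds roughly the same fraction of the training samples."""
    levels = 2 ** num_bits
    thresholds = np.quantile(samples, np.arange(1, levels) / levels)
    # Reconstruction point of each cell: the quantile at the middle of its mass.
    centroids = np.quantile(samples, (np.arange(levels) + 0.5) / levels)
    return thresholds, centroids

def kmeans_codebook(samples, num_bits, iters=50):
    """Clustering-based design (assumed form): 1-D k-means (Lloyd's algorithm),
    initialised from the CDF-based reconstruction points."""
    levels = 2 ** num_bits
    _, centroids = cdf_codebook(samples, num_bits)
    for _ in range(iters):
        thresholds = (centroids[:-1] + centroids[1:]) / 2  # nearest-centroid boundaries
        cells = np.digitize(samples, thresholds)
        for k in range(levels):
            members = samples[cells == k]
            if members.size:  # keep the previous centroid if a cell is empty
                centroids[k] = members.mean()
        centroids.sort()
    return (centroids[:-1] + centroids[1:]) / 2, centroids

def design_codebooks(latents, num_bits, method, per_latent):
    """latents: (num_samples, num_latents) encoder outputs from training data.
    Returns one (thresholds, centroids) pair per latent dimension."""
    num_latents = latents.shape[1]
    if per_latent:  # a separate codebook fitted to each latent's own distribution
        return [method(latents[:, j], num_bits) for j in range(num_latents)]
    shared = method(latents.ravel(), num_bits)  # one codebook pooled over all latents
    return [shared] * num_latents

def quantize(z, codebooks):
    """UE side: map each latent z[j] to the index of the cell it falls in."""
    return np.array([np.digitize(z[j], t) for j, (t, _) in enumerate(codebooks)])

def dequantize(idx, codebooks):
    """NW side: replace each index by the corresponding reconstruction point."""
    return np.array([c[i] for i, (_, c) in zip(idx, codebooks)])

def evaluate(train, test, method, per_latent, num_bits=2):
    """Mean squared quantization error on held-out latents."""
    books = design_codebooks(train, num_bits, method, per_latent)
    rec = np.array([dequantize(quantize(z, books), books) for z in test])
    return float(np.mean((rec - test) ** 2))

# Hypothetical latents: two dimensions with very different statistics.
rng = np.random.default_rng(0)

def sample(n):
    return np.column_stack([rng.normal(0.0, 0.1, n), rng.laplace(2.0, 1.0, n)])

train, test = sample(5000), sample(1000)

for method in (kmeans_codebook, cdf_codebook):
    for per_latent in (True, False):
        print(method.__name__, "per-latent" if per_latent else "across-all",
              evaluate(train, test, method, per_latent))
```

In this toy setup, per-latent codebooks give lower error than a single pooled codebook, because the pooled codebook has to split its levels between two differently distributed latents. The price is that the NW must know one codebook per latent, i.e., `num_latents * 2**num_bits` reconstruction points instead of `2**num_bits`, which is one plausible source of the additional overhead of per-latent quantization.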

The results show that non-uniform quantization designed according to the CSI encoder output significantly improves the squared generalized cosine similarity (SGCS) compared to uniform quantization, as depicted in Figure 2. They further show that per-latent quantization always achieves a higher SGCS than its across-all-latent counterpart, owing to the differences in the distributions of the individual latent variables; however, per-latent quantization may incur additional overhead. Clustering-based and CDF-based non-uniform quantization show similar SGCS performance, with the clustering-based method slightly outperforming the CDF-based one. In summary, we showed that quantization-aware models have the potential to provide better SGCS but lack generalization across payload sizes, whereas quantization-non-aware models have the potential to generalize across payload sizes but lag in SGCS performance.

Figure 2: SGCS performance of quantization methods
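For reference, SGCS between a target eigenvector `v` and its reconstruction `v_hat` is commonly computed as `|v^H v_hat|^2 / (||v||^2 * ||v_hat||^2)`, averaged over subbands (and over samples). The helper below is a minimal single-layer sketch under that assumption; the function name, the `(num_subbands, num_tx)` array layout, and the 13 × 32 dimensions in the example are hypothetical choices, not taken from the study.

```python
import numpy as np

def sgcs(v, v_hat):
    """Mean squared generalized cosine similarity for one layer.
    v, v_hat: complex arrays of shape (num_subbands, num_tx) holding the target
    eigenvectors and their reconstructions (hypothetical layout)."""
    inner = np.abs(np.sum(np.conj(v) * v_hat, axis=-1)) ** 2  # |v^H v_hat|^2 per subband
    norms = np.sum(np.abs(v) ** 2, axis=-1) * np.sum(np.abs(v_hat) ** 2, axis=-1)
    return float(np.mean(inner / norms))  # average over subbands

rng = np.random.default_rng(1)
v = rng.normal(size=(13, 32)) + 1j * rng.normal(size=(13, 32))
# A common complex scale factor does not change SGCS, so this scores (numerically) 1.
print(sgcs(v, 2.5 * np.exp(1j * 0.7) * v))
# Additive reconstruction error pulls the score below 1.
noisy = v + 0.5 * (rng.normal(size=v.shape) + 1j * rng.normal(size=v.shape))
print(sgcs(v, noisy))
```

Because the metric ignores a common complex scale factor on each subband vector, it only measures how well the direction of the eigenvector is preserved, which suits eigenvector-based CSI feedback where the phase and norm of the vector carry no information.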